Building High Performing EMC ScaleIO based Hyper-converged Environments

April 30, 2016

Introduction

EMC ScaleIO is a software-based solution that aggregates the storage media (spindles and SSDs) in servers to create a server-based SAN. In a vSphere environment, it is deployed by installing ScaleIO software in the vSphere hypervisor and in a Linux-based VM running on each host. This allows the vSphere hosts to provide both storage and compute to the virtual machines (VMs) running on them. This converged environment is called a hyper-converged infrastructure (HCI).

Benefits

ScaleIO HCI offers several advantages over traditional SANs. Some of the key benefits are listed below:

- Converges the compute and storage resources of commodity hardware into a single layer in vSphere environments

- Combines HDDs, SSDs, and PCIe flash cards into a virtual pool of block storage

- Creates a massively parallel, highly scalable storage system (in both capacity and performance)

- Enables performance to scale linearly with the infrastructure as more servers with storage are added
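To make the linear-scaling claim concrete, here is a minimal Python sketch of an idealized pool: each node contributes its local devices, and the pool's raw capacity and aggregate IOPS are simple sums. The `Device` and `Node` classes, the `pool_totals` function, and the device figures are hypothetical illustrations, not ScaleIO APIs, and the model deliberately ignores data protection overhead and network limits.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """A local drive a node contributes to the pool (HDD, SSD, or PCIe flash)."""
    kind: str
    capacity_gb: int
    iops: int


@dataclass
class Node:
    """A vSphere host contributing its local devices to the pool."""
    name: str
    devices: list = field(default_factory=list)


def pool_totals(nodes):
    """Return (raw capacity in GB, aggregate IOPS) for a pool built from all nodes.

    Idealized model: data protection overhead and network limits are ignored,
    so both totals are plain sums over every contributed device.
    """
    capacity = sum(d.capacity_gb for n in nodes for d in n.devices)
    iops = sum(d.iops for n in nodes for d in n.devices)
    return capacity, iops


if __name__ == "__main__":
    # Hypothetical per-node configuration: one SSD plus two HDDs.
    ssd = Device("ssd", capacity_gb=800, iops=20_000)
    hdd = Device("hdd", capacity_gb=2_000, iops=150)
    for count in (2, 4, 8):
        cluster = [Node(f"node{i}", [ssd, hdd, hdd]) for i in range(count)]
        print(count, "nodes:", pool_totals(cluster))
```

In this model, doubling the number of identically configured nodes doubles both totals. Real clusters lose some capacity and performance to data protection and networking, so treat these sums as an upper bound.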

Memory-based Acceleration in ScaleIO HCI

The I/O latency of a ScaleIO-based HCI can be significantly lowered using server DRAM and PernixData FVP software. FVP aggregates the DRAM in the servers that make up the ScaleIO HCI into a massively parallel, linearly scalable data tier, referred to as Distributed Fault-Tolerant Memory (DFTM), which can be used to accelerate both data that VMs read frequently and new data that VMs write.
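The read and write paths described above can be modelled roughly as follows. This is a conceptual Python sketch under stated assumptions, not how FVP is implemented: `AcceleratedVolume` is a hypothetical class, the backing ScaleIO volume and the peer host's memory are plain dictionaries, reads are cached in a local LRU, and each write is held in local DRAM with one copy on a peer host (the "fault-tolerant" part) until it is destaged.

```python
from collections import OrderedDict


class AcceleratedVolume:
    """Hypothetical model of a VM volume accelerated by a DRAM tier."""

    def __init__(self, backend, peer_buffer, cache_blocks):
        self.backend = backend            # stands in for the ScaleIO volume
        self.peer_buffer = peer_buffer    # replica of dirty blocks on a peer host
        self.cache_blocks = cache_blocks  # local read-cache size, in blocks
        self.read_cache = OrderedDict()   # clean blocks, least recently used first
        self.dirty = {}                   # acknowledged writes not yet destaged
        self.backend_reads = 0

    def read(self, lba):
        if lba in self.dirty:             # newest data lives in the write buffer
            return self.dirty[lba]
        if lba in self.read_cache:        # hit: served from local DRAM
            self.read_cache.move_to_end(lba)
            return self.read_cache[lba]
        self.backend_reads += 1           # miss: fetch from the backing volume
        data = self.backend[lba]
        self._cache(lba, data)
        return data

    def write(self, lba, data):
        # Acknowledge once the block is in local DRAM and a peer copy exists.
        self.dirty[lba] = data
        self.peer_buffer[lba] = data
        self.read_cache.pop(lba, None)    # drop any stale cached copy

    def flush(self):
        # Destage dirty blocks to the backing volume, then release peer replicas.
        for lba, data in self.dirty.items():
            self.backend[lba] = data
            self.peer_buffer.pop(lba, None)
            self._cache(lba, data)
        self.dirty.clear()

    def _cache(self, lba, data):
        self.read_cache[lba] = data
        self.read_cache.move_to_end(lba)
        while len(self.read_cache) > self.cache_blocks:
            self.read_cache.popitem(last=False)  # evict least recently used
```

Keeping a second copy on another host is what lets a write be acknowledged from memory without being lost if one host fails; the single peer copy is an assumption of this sketch.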

This accelerated hyper-converged infrastructure was evaluated in a lab on the 4-node ScaleIO HCI shown in Figure 1.

I/O Performance

Here is a summary of the I/O performance of the ScaleIO stack with DFTM.

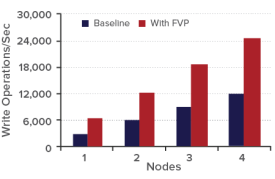

With the test workload, each ScaleIO node saw an 8x increase in read operations/sec and a 1.2x increase in write operations/sec. As the HCI scaled out (as new nodes were added), the performance gain from DFTM acceleration scaled proportionally. With 4 nodes, throughput approached 150K operations/sec for reads and 25K operations/sec for writes.
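As a rough back-of-envelope check, the 4-node totals can be split per node and the reported speedups divided out to estimate the implied per-node rates without DFTM. This Python sketch assumes the approximate 150K and 25K figures are cluster-wide totals with DFTM, that load is split evenly across nodes, and that the 8x and 1.2x per-node speedups apply uniformly; the resulting baselines are estimates, not measurements from the tests. `per_node_rates` is a hypothetical helper.

```python
def per_node_rates(cluster_ops_per_sec, nodes, speedup):
    """Return (accelerated, implied baseline) ops/sec per node.

    Assumes the cluster-wide rate is split evenly across nodes and that the
    per-node speedup applies uniformly.
    """
    accelerated = cluster_ops_per_sec / nodes
    return accelerated, accelerated / speedup


if __name__ == "__main__":
    reads = per_node_rates(150_000, nodes=4, speedup=8)
    writes = per_node_rates(25_000, nodes=4, speedup=1.2)
    print(f"reads:  {reads[0]:,.0f}/s per node with DFTM, ~{reads[1]:,.0f}/s baseline")
    print(f"writes: {writes[0]:,.0f}/s per node with DFTM, ~{writes[1]:,.0f}/s baseline")
```

Under these assumptions each node serves about 37.5K reads/sec and 6.25K writes/sec with DFTM, implying baselines of roughly 4.7K reads/sec and 5.2K writes/sec per node.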

Why is ScaleIO HCI with DFTM interesting?

FVP decouples the I/O performance of the converged infrastructure from its capacity. Administrators retain all the benefits of ScaleIO while managing the converged infrastructure’s I/O behavior independently of the underlying commodity hardware. Even if hardware components vary from node to node in a cluster, the I/O performance experienced by the VMs remains consistent and independent of the physical characteristics of those components.

FVP serves as a single data tier for both reads and writes, so reads and writes from VMs see similar I/O latencies. At the same number of outstanding I/Os, similar latencies translate into similar rates of operations.
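The link between latency and operation rate follows from Little's law: for a fixed number of outstanding I/Os, operations per second equal the outstanding I/O count divided by the per-operation latency. A minimal Python sketch, with hypothetical queue depths and latencies:

```python
def ops_per_sec(outstanding_ios, latency_us):
    """Little's law: throughput = concurrency / latency (latency in microseconds)."""
    return outstanding_ios * 1_000_000 / latency_us


if __name__ == "__main__":
    # Hypothetical: reads and writes both served from the same memory tier.
    read_rate = ops_per_sec(outstanding_ios=32, latency_us=500)
    write_rate = ops_per_sec(outstanding_ios=32, latency_us=500)
    print(read_rate, write_rate)  # equal latency and concurrency -> equal rates
```

The same formula shows why equal concurrency matters: if writes ran at half the queue depth of reads, their rate would be half as high even at identical latency.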

Another interesting feature of this architecture is that every accelerated VM gets a large, high-speed write buffer. With shared storage, the high-speed buffer (the storage cache) is shared across all the VMs connected to the array, whereas FVP gives each VM an equal share of the buffer. The buffer's performance can be changed simply by changing the underlying high-speed media (for example, from SSDs to DRAM), and the number of VMs that can use write buffers can be increased by deploying higher-capacity high-speed media.
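The equal-share buffer model can be expressed as simple arithmetic. In this Python sketch, `per_vm_buffer_gb` and `max_vms` are hypothetical helpers and the capacities are made up; it only illustrates the point that larger high-speed media lets more VMs receive a write buffer of a given size.

```python
def per_vm_buffer_gb(tier_capacity_gb, accelerated_vms):
    """Equal share of a host's acceleration tier given to each accelerated VM."""
    if accelerated_vms <= 0:
        raise ValueError("need at least one accelerated VM")
    return tier_capacity_gb / accelerated_vms


def max_vms(tier_capacity_gb, buffer_per_vm_gb):
    """Number of VMs that can each receive a buffer of the given size."""
    return int(tier_capacity_gb // buffer_per_vm_gb)


if __name__ == "__main__":
    # Hypothetical host tiers: 128 GB vs 256 GB of high-speed media.
    for capacity in (128, 256):
        print(capacity, "GB ->", max_vms(capacity, buffer_per_vm_gb=16), "VMs at 16 GB each")
```

Unlike a shared array cache, where one busy VM can occupy most of the buffer, each VM's share here depends only on the tier size and the number of accelerated VMs.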

You can find more details about the architecture, experiments and results in this white paper. Feel free to leave your comments/questions here.